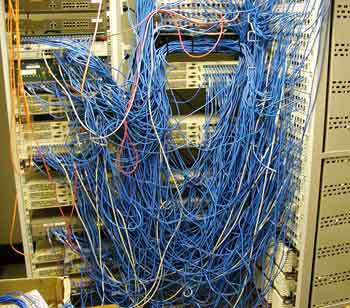

No, my network looks nothing like that, but it has felt a little bit like that while I tried to work out why I was getting intermittent connectivity to the new Ubuntu 18.04 VMs I’d created here. The fix to my network woes was pretty simple once I’d worked out what was causing the problem.

When I initially set them up and briefly tested them, everything seemed to be working fine, but over the next few days I started having intermittent problems connecting back into some of the services running on the ONLYOFFICE and Docker/Portainer VMs.

One of the first things I checked (as it was set up differently on FreeNAS0) was the IP addresses. They were just assigned by DHCP, whereas on FreeNAS1 they had been static, so I thought I’d try to fix that. It turns out Ubuntu 18.04 has a slightly different way of doing this, using netplan, which is pretty neat. You simply edit the .yaml file in `/etc/netplan` with something like this:

```yaml
network:
  version: 2
  renderer: networkd
  ethernets:
    enp0s3:
      dhcp4: no
      addresses: [192.168.168.xx/24]
      gateway4: 192.168.168.1
      nameservers:
        addresses: [8.8.8.8,8.8.4.4]
```

and then run `netplan apply`. Unfortunately, that wasn’t the problem 🙁

I can admit my weaknesses, and ‘networking’ is certainly one of them! I understand enough to get things working, but not enough to fix problems when they occur. I really need to fix that in 2020! I tried a few other things, but nothing worked. If anything, things got worse, as I started to see similar issues with some of the jails, although to a much lesser degree.

I was down to running just one VM and was pretty convinced the problem lay between the ssl-proxy jail (I was seeing its certificate in the browser when trying to connect) and the VM/jail behind it (as these were all working inside the network using their IP addresses), but I’d pretty much run out of ideas. So I called for help on the good old iXsystems community forum.

In the meantime, I’d started to think I might need to move the jails back to the old FreeNAS1 machine, but I’d made enough changes that replicating everything back seemed the easiest option. Anyway, I was back looking at FreeNAS1 and luckily noticed that two of the jails were running! I’d stopped them all when the transfer to FreeNAS0 was complete, so they shouldn’t have been.

The fact that one of those jails was my ssl-proxy jail made me realise the problem: the copies on FreeNAS0 and FreeNAS1 were both ‘trying’ to use the same IP address! I know enough about networks to know they don’t like that, so I stopped the rogue jails and tried to work out why they’d started up.
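To make the clash concrete, here is a minimal Python sketch, assuming you keep a simple inventory of which jail or VM claims which static address and whether it is currently running. The tuple format, the addresses and the `example-jail` entry are all hypothetical (FreeNAS doesn’t produce a list like this for you). The function flags any address claimed by more than one running machine, which is exactly the situation the rogue jails on FreeNAS1 created.

```python
from collections import defaultdict
from ipaddress import ip_address


def find_ip_conflicts(assignments):
    """Return {ip: [hosts]} for every address claimed by more than one running host.

    `assignments` is an iterable of (host, ip, running) tuples. This inventory
    format is hypothetical; FreeNAS does not generate it for you.
    """
    claims = defaultdict(list)
    for host, ip, running in assignments:
        # A stopped jail/VM isn't holding its address, so it can't clash.
        if running:
            # ip_address() validates the string and gives a canonical key to compare on.
            claims[ip_address(ip)].append(host)
    return {str(ip): hosts for ip, hosts in claims.items() if len(hosts) > 1}


# Hypothetical inventory: jail names follow the post, the addresses are made up.
inventory = [
    ("FreeNAS0/ssl-proxy", "192.168.168.10", True),
    ("FreeNAS1/ssl-proxy", "192.168.168.10", True),      # should have been stopped
    ("FreeNAS0/calibre", "192.168.168.11", True),
    ("FreeNAS1/calibre", "192.168.168.11", True),        # should have been stopped
    ("FreeNAS1/example-jail", "192.168.168.12", False),  # stopped, so no clash
]

print(find_ip_conflicts(inventory))
# → {'192.168.168.10': ['FreeNAS0/ssl-proxy', 'FreeNAS1/ssl-proxy'], '192.168.168.11': ['FreeNAS0/calibre', 'FreeNAS1/calibre']}
```

On a real network, a clash like this tends to show up as intermittent rather than total failure: traffic goes to whichever machine most recently answered the ARP request for that address, which fits the on-and-off connectivity I was seeing.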

I found the culprit pretty quickly. I have a few Cron Tasks set up on FreeNAS. Most of them run scripts to notify me of ZPOOL, SMART and UPS statuses a couple of times a month, and to save a nightly backup of the config database. But two of them were the problem: one ran a weekly certbot script to renew my Let’s Encrypt certificates (which starts the ssl-proxy jail), and the other checked that Calibre was running (which starts the calibre jail). Both of these Cron Tasks have now been deleted on FreeNAS1.

So far, so good: I’ve not had a single issue accessing any of the external services running inside a jail or VM. I have started the replication of FreeNAS0 back to FreeNAS1, so I hope that in a few days everything will be set up exactly as it should be, just in time for Christmas 😀
